Solaris and Linux – NFS/iSCSI performance

Recently i did a post at opensolaris.org about “make” a comparison, or describe some configurations that have impact on the performance on Solaris and Linux as NFS servers. As far as i know, there are many differences between all NFS server implementations, and between Solaris and Linux is not different. If you are interested take a look at this post to see the whole history…

I’m used to Linux as a NFS server, and it just works. But the world is changing, and the problem is not Linux and NFS, but the filesystem’s administration (XFS, EXT3, etc..). That’s why i’m making some tests with Solaris and ZFS to see what i can get in terms of RAS and what i can loose (if i would) in performance.

But until now, nobody has answer anything, so, i have made some tests with iozone, and here i will put the results and my considerations (right and wrong), about it. Maybe some kernel hacker (Solaris, Linux, NFS, ZFS, XFS), find this post and comment my mistakes.

First of all, this is not an “academic” test of performance, or something like that. I did not reboot all the servers before each test, and did not configure the servers memory with less than or equal to .5*X MB (where “X” is the test file size). If all the reads will be satisfied from the cache, ok, so be it, let’s see how that works (in the iozone paper, i did read that “-U” option should take care of destroy the cache for NFS, i don’t know if for iSCSI or local filesystems that is true too)… I did not create a “special” lab for this either, i have used some “development servers” (idle ones), and if you have read the post, you know that i want to see pratical scenarios, and i want stimulate the critics! :)

p.s: I did two “tests”, one with the default iozone filesizes, and cache intensive. And another test with iozone creating files with 2GB to 4GB, then the cache “must” be eliminated completely.

I’m deploying a iSCSI solution too, and did the same tests just changing the access protocol for the “tested share” from NFS to iSCSI. The NFS protocol is used for “things” that it has been developed to, and for another things that, obviously, it can be used but maybe there are other (better) options. A pratical example is the export of a filesystem or directory that is just for one machine (like the “/var” directory in a diskless client). In such case, all the features (and overhead), that such configuration will have, is not necessary. Another protocol, like iSCSI, is an example of a possible better choice in that scenario.

The main goal of NFS is share a directory for “n” machines (where n > 1). Take a look at this excellent paper, many aspects of the results in my tests were very close to the “concepts” described in that paper.

The “Test environment” is as follow:

Solaris Server:

1)

Software: A Solaris 10 (update 4) as NFS server, and iSCSI target (booting from SAN – EMC).

Hardware: Intel(r) Pentium(r) D CPU 3.00GHz (2x), 4GB RAM.

This server provides a Gentoo GNU/Linux environment for the machine where the tests will run. This server is responsible for the RO and RW Gentoo environment.

GNU/Linux Servers:

1)

Software: A Linux (Debian 3.1) as NFS server (booting from SAN – EMC).

Hardware: Intel(R) Xeon(TM) CPU 3.06GHz (2x), 1GB RAM.

This server provides the RW Gentoo GNU/Linux environment (etc and data).

2)

Software: A Linux (Debian 3.0) as NFS server (booting from SAN – EMC).

Hardware: Intel(R) Xeon(TM) CPU 3.06GHz (1x), 2GB RAM.

This server provides the RO Gentoo GNU/Linux environment (softwares).

The client:

Software: A Gentoo GNU/Linux diskless/NFS (In one test provided by the Solaris server, and on another provided by the combination of the two Linux servers).

Hardware: Intel(R) Pentium(R) 4 CPU 1.70GHz, 1GB RAM.

For the both scenarios, the Gentoo GNU/Linux environment is on a dedicated filesystem, and the “tested share” too (everything on EMC discs).

On the Solaris server the filesystems are ZFS, and XFS (with noatime) on the GNU/Linux servers. The client is not on the same network as the servers (the servers are connected in a Gigabit network, and the client is on a FAST/Ethernet).

p.s: I need to make such test with ZFS configured with noatime too.

So, as you could see, the iozone and all the binaries are read from the NFS servers. The default recommended test for iozone is run it from a local filesystem, and just test a NFS share. Well, we will do it a bit different…

First, i did tests with the client machine booting from the Linux servers (RO and RW), and tested a specific share (on the RW server), with different export options (sync/async). The results are the files sync and async. All tests were executed twice, so i could see if the values “seems to be the same” or not. If you want to see the second execution for the “async” option for example, just put a “2” at the end (e.g: iozonelinux-nfsv3-async2.xls). The “log” files are here too, you will know what need to be changed at the end (s/xls/log). I think the important part of the test is the tendency of the values, so don’t be very concerned about one or two isolated values. If you look at the beginning of the files (log or xls), you will see the command that i have used for the tests (e.g: iozone -azcR -U /mnt/solaris -f /mnt/solaris/testfile -b iozonesolaris-cache.xls). Don’t worry about the “names”, i have changed them to organize the results. The “mount options” were the same in all tests: “vers=3,intr,rsize=8192,wsize=8192“. I don’t know about any other “tunning” for XFS on Linux (except “noatime”), and the network transfer size “8192” was the best setting i got ( a long time ago :).

The paper “Analyzing NFS Client Performance with IOzone” by Don Capps and Tom McNeal, describes the iozone tests as follow:

“The benchmark will execute file IO to a broad range of file sizes, from 64Kbytes to 512Mbytes in the default test case, using record sizes ranging from 4Kbytes to 16Mbytes. For both files and records, each size increase will simply be a doubling of the previous value.”

And the tests are:

1) Sequential read/write – The records will be written to a new file, and read from an existing file, from beginning to end.

2) Sequential rewrite – The records will be rewritten to an existing file, from beginning to end.

3) Sequential reread – The records of a recently read file will be reread, from beginning to end. This usually results in better performance than the read, due to cached data.

4) Backwards read – The records will be read from the end of the file to the beginning. Few operating systems optimize file access according to this pattern, and some applications, such as MSC Nastrans, do this often.

5) Random read/write – The records, at random offsets will be written and read.

6) Record rewrite – A record will be rewritten in an existing file, starting at the beginning of the file.

7) Strided read – Records will be read at specific constant intervals, from beginning to end.

8 fread/fwrite – The fread() and fwrite() functions will be invoked to write to a new file, and read from an existing file, from beginning to end.

9) freread – The fread() function is invoked on a recently read file.

10) frewrite – The fwrite() function is invoked on an existing file.

11) pread/pwrite – The performance of pread() and pwrite() on systems that support these system calls will be measured.

Here there is more detailed information about each test.

The options that i have used in iozone are explained in the manual page:

-a Used to select full automatic mode. Produces output that covers all tested file operations for record sizes of 4k to 16M for file sizes of 64k to 512M.

-z Used in conjunction with -a to test all possible record sizes. Normally Iozone omits testing of small record sizes for very large files when used in full automatic mode. This option forces Iozone to include the small record sizes in the automatic tests also.

-c Include close() in the timing calculations. This is useful only if you suspect that close() is broken in the operating system currently under test. It can be useful for NFS Version 3 testing as well to help identify if the nfs3_commit is working well.

-R Generate Excel report.

-U mountpoint Mount point to unmount and remount between tests. Iozone will unmount and remount this mount point before beginning each test. This guarantees that the buffer cache does not contain any of the file under test.

Remember that iozone produces an output which is the number of bytes per second that the system could read/write to the test files.

Linux NFSv3 Server

The tests with Linux as the NFS server was very good, and with very few variations. We can see some diffs on the values between sync and async options, but the two executions for each one were pretty much the same values.

The results:

a) With sync export option.

b) With async export option.

SOME ASPECTS ABOUT THE RESULTS (Linux NFSv3 Server):

1) For this test (cache intensive), there was (almost) no difference between sync and async operations.

2) The read performance was constant: 10MB to 12MB. That is exactly the network speed, cache on the fileserver?

3) The write performance seems to be limited to the network bandwidth (even with async option), maybe because of the “limit” of “pending writes” on the client’s cache. What should make the async behave like sync (in average)…

4) Meta-data updates are synchronous in NFS, but the iozone’s test are not meta-data “intensive”, so i think the item “3” above is a better answer…

Solaris NFSv3 Server

For Solaris and ZFS i had to make a lot more test combinations. ZFS was designed to handle many “fail scenarios”, and so, trying to make a “fair” comparison, i need to think about the “quality” of the NFS service that was being provided to the client. As far as i know, the Solaris NFS service is sync by default, that’s why i did tests with sync and async export options on GNU/Linux. Another point was the EMC discs, and the ZFS cache flush feature. ZFS was designed to flush the disc cache and make sure that the data is on stable storage, what is not true for XFS. And for “cheap” discs that is a great feature, but for EMC is not (EMC cache is like “stable storage”). ZFS has compression capabilities, so i did some tests with that option enabled too. I think with that option the system would have a better response (less IO), with more CPU usage.

I did not even think about disable ZIL, if you want to know why, take a look here, here, and… here.

The Linux NFS servers were not reboot anytime during the tests, but the Solaris server was rebooted each time i need to change the setting in “/etc/system” configuration file (set zfs:zfs_nocacheflush=1 or 0). At least three times…

The results:

a) With the default Solaris configuration.

b) With the zfs_nocacheflush enabled.

c) Enabling the compression on the tested filesystem.

d) Using compression and without cache flush (zfs_nocacheflush enabled).

SOME ASPECTS ABOUT THE RESULTS (Solaris NFSv3 Server):

1) The write performance increases as file sizes increases (5MB to 10MB per second).

2) The read performance was constant: 10MB to 12MB. That is exactly the network speed, cache on the fileserver?

3) The better write performance was the network speed too (like Linux). Maybe the limitation is the protocol overhead (even for cache hits). I’m using the “-c” option to iozone, and so the nfs3_commit…

4) Could we tell that the NFS write performance will be “always” the network speed? (Ok, it’s early to say that, but would be nice to see how much it scales).

…)

Solaris iSCSI Target

Here are the results for the iSCSI protocol:

a) With the default Solaris configuration.

b) With the zfs_nocacheflush enabled.

c) Enabling the compression on the tested filesystem.

d) Using compression and without cache flush (zfs_nocacheflush enabled).

SOME ASPECTS ABOUT THE RESULTS (Solaris iSCSI Target):

1) The write performance was much better than any write performance using NFS (until 40x).

2) The write performance for files >= 128MB was not so good (but still better than NFS).

3) The read performance was constant too (like NFS): 10MB to 12MB.

4) The answer for the better write performance seems to be on the filesystem cache. On iSCSI the filesystem is on the initiator (client), and on NFS the filesystem cache is on the server.

5) In the XFS filesystem (or other modern filesystems), the meta-data operations are asynchronous, as well as data operations. This must be the great difference between iSCSI write performance over NFS.

6) The iSCSI protocol does not need to handle “share” between machines, the iSCSI block device is shared just for one machine. So, the NFS clients must exchange messages with the server to guarantee consistency of the data (even on a cache hit, in a read operation, for example).

Now let’s see the results for iozone’s tests with just two new arguments:

a) -n 2g minimum file size 2 giga.

b) -g 4g maximum file size 4 giga.

That must be sufficient to avoid any cache (the client memory is 1 giga).

Linux NFSv3 Server (2GB to 4GB)

The results:

a) With sync export option.

b) With async export option.

SOME ASPECTS ABOUT THE RESULTS (Linux NFSv3 Server – 2GB to 4GB):

1).

Solaris iSCSI Target (2GB to 4GB)

The results:

a) With the default Solaris configuration.

SOME ASPECTS ABOUT THE RESULTS (Solaris iSCSI Target – 2GB to 4GB):

1).

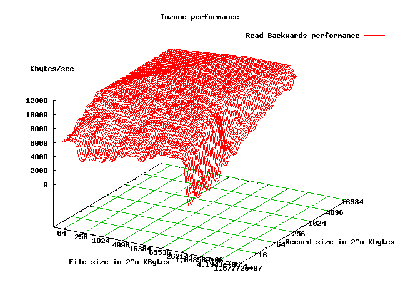

GRAPHS

I did find an usefull package to plot the data generated by the iozone utility (if i could find that package to make my monograph’s plots :).

Here are all the graphs:

1) Linux – NFSv3 and Solaris – NFSv3 (sync vs default)

2) Linux – NFSv3 (sync vs async)

3) Solaris – NFSv3 (compression vs compression/cache)

4) Solaris – iSCSI (compression vs compression/cache)

5) Solaris – iSCSI (default vs cache)

6) Solaris – iSCSI – 2GB to 4GB (default vs cache)

7) Linux – NFSv3 – 2GB to 4GB (sync vs async)

8) Solaris – iSCSI and Linux – NFSv3 – 2GB to 4GB (default vs sync)

9) Solaris – NFSv3 and Linux – NFSv3 – 2GB to 4GB (default vs sync)

“The read performance was constant: 10MB to 12MB. That is exactly the network speed, cache on the fileserver?”

ouch… – if this is true, then the network is the major bottleneck, and will hide pretty much of the interesting differences between the NFS Stacks and iSCSI [I dimly remember reading about linux iscsi solutions, too – would be interesting to test if my naive expectation of LESS DIFFERENCES performancewise of iSCSI stacks vs. NFS stacks holds true?]